Peter Robicheaux 1† Matvei Popov1† Anish Madan 2

Isaac Robinson 1 Joseph Nelson 1 Deva Ramanan 2 Neehar Peri 2

Roboflow Carnegie Mellon University

† First authors

Introduced in the paper "Roboflow 100-VL: A Multi-Domain Object Detection Benchmark for Vision-Language Models", RF100-VL is a large-scale collection of 100 multi-modal datasets with diverse concepts not commonly found in VLM pre-training.

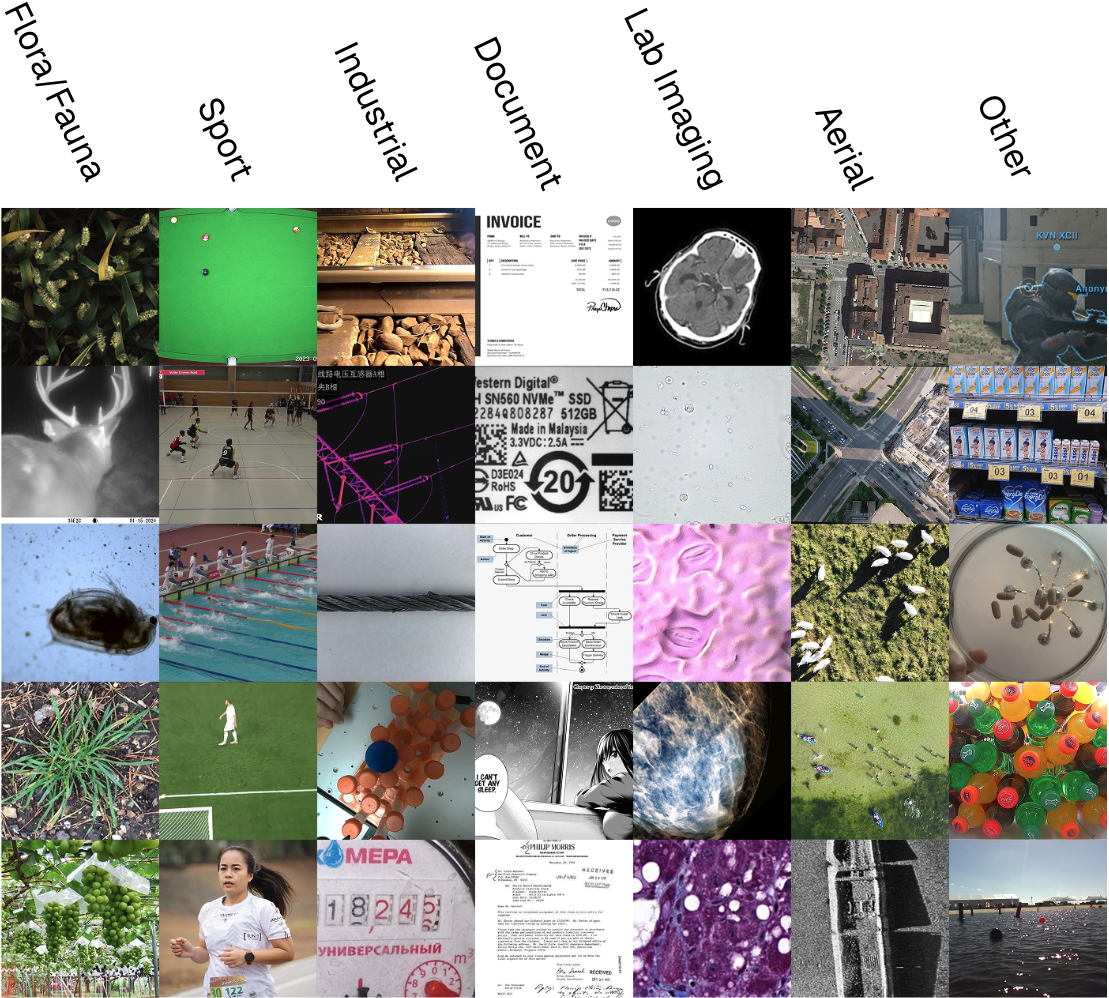

The benchmark includes images, with corresponding annotations, from seven domains: flora and fauna, sport, industry, document processing, laboratory imaging, aerial imagery, and miscellaneous datasets related to various use cases for which detection models are commonly used.

You can use RF100-VL to benchmark fully supervised, semi-supervised and few-shot object detection models, and Vision Language Models (VLMs) with localization capabilities.

To download RF100-VL, first install the rf100vl pip package:

pip install rf100vl

RF100-VL is hosted on Roboflow Universe, the world's largest repository of annotated computer vision dataset. You will need a free Roboflow Universe API key to download the dataset. Learn how to find your API key

Export your API key into an environment variable called ROBOFLOW_API_KEY:

export ROBOFLOW_API_KEY=YOUR_KEY

Several helper functions are available to download RF100-VL and its subsets. These are split up into two categories: functions that retrieve Dataset objects with the name of each project and its category. (that start with get_), and data downloaders (that start with download_).

| Data Loader Name | Dataset Name |

|---|---|

get_rf100vl_fsod_projects |

RF100-VL-FSOD |

get_rf100vl_projects |

RF100-VL |

get_rf20vl_fsod_projects |

RF20-VL-FSOD |

get_rf20vl_full_projects |

RF20-VL |

download_rf100vl_fsod |

RF100-VL-FSOD |

download_rf100vl |

RF100-VL |

download_rf20vl_fsod |

RF20-VL-FSOD |

download_rf20vl_full |

RF20-VL |

Each dataset object has its own download method.

Here is an example showing how to download the full dataset:

from rf100vl import download_rf100vl

download_rf100vl(path="./rf100-vl/")The datasets will be downloaded in COCO JSON format to a directory called rf100-vl. Every dataset will be in its own sub-folder.

Organized by: Anish Madan, Neehar Peri, Deva Ramanan, Shu Kong

This challenge focuses on few-shot object detection (FSOD) with 10 examples of each class provided by a human annotator. Existing FSOD benchmarks repurpose well-established datasets like COCO by partitioning categories into base and novel classes for pre-training and fine-tuning respectively. However, these benchmarks do not reflect how FSOD is deployed in practice.

Rather than pre-training on only a small number of base categories, we argue that it is more practical to download a foundational model (e.g., a vision-language model (VLM) pretrained on web-scale data) and fine-tune it for specific applications. We propose a new FSOD benchmark protocol that evaluates detectors pre-trained on any external dataset (not including the target dataset), and fine-tuned on K-shot annotations per C target classes.

We propose our new FSOD benchmark using the challenging nuImages dataset. Specifically, participants will be allowed to pre-train their detector on any dataset (except nuScenes or nuImages), and can fine-tune on 10 examples of each of the 18 classes in nuImages.

Goal: Developing robust object detectors using few annotations provided by annotator instructions. The detector should detect object instances of interest in real-world testing images.

Environment for model development:

- Pretraining: Models are allowed to pre-train on any existing datasets except nuScenes and nuImages.

- Fine-Tuning: Models can fine-tune on 10 shots from each of nuImage's 18 classes.

- Evaluation: Models ar 7B26 e evaluated on the standard nuImages validation set.

Evaluation metrics:

- AP: The average precision of IoU thresholds from 0.5 to 0.95 with the step size 0.05.

- AP50 and AP75: The precision averaged over all instances with IoU threshold as 0.5 and 0.75, respectively.

- AR (average recall): Averages the proposal recall at IoU threshold from 0.5 to 1.0 with the step size 0.05, regardless of the classification accuracy.

Output format: One JSON file of predicted bounding boxes of all test images in a COCO compatible format.

[

{"image_id": 0, "category_id": 79, "bbox": [976, 632, 64, 80], "score": 99.32915569311469, "image_width": 8192, "image_height": 6144, "scale": 1},

{"image_id": 2, "category_id": 18, "bbox": [323, 0, 1724, 237], "score": 69.3080951903575, "image_width": 8192, "image_height": 6144, "scale": 1},

...

]nuImages is a large-scale 2D detection dataset that extends the popular nuScenes 3D detection dataset. It includes 93,000 images (with 800k foreground objects and 100k semantic segmentation masks) from nearly 500 driving logs. Scenarios are selected using an active-learning approach, ensuring that both rare and diverse examples are included. The annotated images include rain, snow and night time, which are essential for autonomous driving applications.

We pre-train Detic on ImageNet21-K, COCO Captions, and LVIS and fine-tune it on 10 shots of each nuImages class.

- Submission opens: March 1st, 2025

- Submission closes: May 10th, 2025, 11:59 pm Pacific Time

- The top 3 participants on the leaderboard will be invited to give a talk at the workshop

- Zhou et. al. "Detecting Twenty-Thousand Classes Using Image-Level Supervision". Proceedings of the IEEE European Conference on Computer Vision. 2022

- Caesar et. al. "nuScenes: A Multi-Modal Dataset for Autonomous Driving." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020.

This work was supported in part by compute provided by NVIDIA, and the NSF GRFP (Grant No. DGE2140739).

The datasets that comprise RF100-VL are licensed under an Apache 2.0 license.