Update 05-09-20: All working well again. Onto getting it live.

Update 05-08-20: More or less functional again, with some rendering issues and lag.

Update 05-05-20: Unfortunately I've let this project lapse, so it is no longer live online. I am working on updating it and getting it back up. Full-time job and all. (shrug)

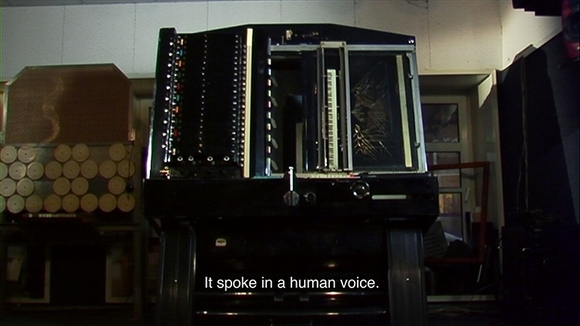

Welcome to Virtual ANS, a virtual model of the ANS Synthesizer, a Soviet-era visual synthesizer that translates images into sound.

Click the image to watch a screencast demo of Virtual ANS on YouTube:

I learned about the ANS Synthesizer during my MFA at Mills College and was immediately fascinated by its sonic possibilities. Since there is only one, and it’s in Moscow behind a velvet rope, I decided to create a virtual simulation that is accessible to anyone and expanded in functionality. I’ve created a visual to represent the actual physical interface.

The Virtual ANS works on the same principle as the original ANS instrument, translating image to sound. On the vertical axis, there are 120 distinct sine tones that can be triggered simultaneously. On the horizontal axis, each pixel column is played over a set duration. When users upload an image, or draw and save one, it is converted to grayscale and analyzed column by column with Pillow. The amplitude of each tone is scaled by pixel value, with white pixels being the loudest and black pixels being silent. CSS3 animation is used to simulate the action of the physical interface.
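To make the image-to-sound mapping concrete, here is a minimal sketch of how one pixel column could be turned into audio samples. It is not the app's actual code. The sample rate, the frequency range, the geometric tone spacing, the choice that the top row is the highest pitch, and all function names (`tone_frequency`, `column_amplitudes`, `render_column`, `load_columns`) are assumptions made for illustration. Only the 120 tones, the grayscale conversion with Pillow, and the white-is-loudest scaling come from the description above.

```python
import math

NUM_TONES = 120                  # from the description: 120 sine tones on the vertical axis
SAMPLE_RATE = 44100              # assumed sample rate in Hz
LOW_HZ, HIGH_HZ = 55.0, 7040.0   # assumed pitch range, not taken from the project


def tone_frequency(index):
    """Frequency of tone `index` (0 = lowest), spaced geometrically (assumed)."""
    return LOW_HZ * (HIGH_HZ / LOW_HZ) ** (index / (NUM_TONES - 1))


def column_amplitudes(column):
    """Map grayscale values (0 = black, 255 = white), listed top to bottom,
    to amplitudes in [0, 1], indexed from the lowest tone upward.
    Assumes the top row of the image is the highest pitch."""
    return [value / 255.0 for value in reversed(column)]


def render_column(column, duration=0.05):
    """Sum the active sine tones for one pixel column over `duration` seconds."""
    amps = column_amplitudes(column)
    # Skip silent (black) pixels so they cost nothing to render.
    active = [(tone_frequency(i), a) for i, a in enumerate(amps) if a > 0]
    samples = []
    for n in range(round(SAMPLE_RATE * duration)):
        t = n / SAMPLE_RATE
        total = sum(a * math.sin(2 * math.pi * f * t) for f, a in active)
        samples.append(total / NUM_TONES)  # normalize so the sum stays in [-1, 1]
    return samples


def load_columns(path):
    """Read an image with Pillow and return its grayscale pixel columns.
    The image is resized to NUM_TONES rows so each row maps to one tone."""
    from PIL import Image  # requires Pillow; imported here so the rest runs without it

    img = Image.open(path).convert("L")        # "L" = 8-bit grayscale
    img = img.resize((img.width, NUM_TONES))
    px = img.load()
    return [[px[x, y] for y in range(NUM_TONES)] for x in range(img.width)]
```

Playing a whole image would then amount to calling `render_column` on each column returned by `load_columns` and concatenating the results. Dividing by `NUM_TONES` is a deliberately simple way to avoid clipping when every pixel in a column is white.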

Users can select images from an existing library, upload images, or draw, save, and play an image using a provided canvas. By registering, users gain the ability to upload private images and to favorite images for easy access. I’ve used form type validation, and bcrypt for secure password hashing and storage. Uploaded images are moderated using the SightEngine API, which scans for and rejects images containing weapons, drugs, alcohol, and nudity, making the app accessible for users of all ages.