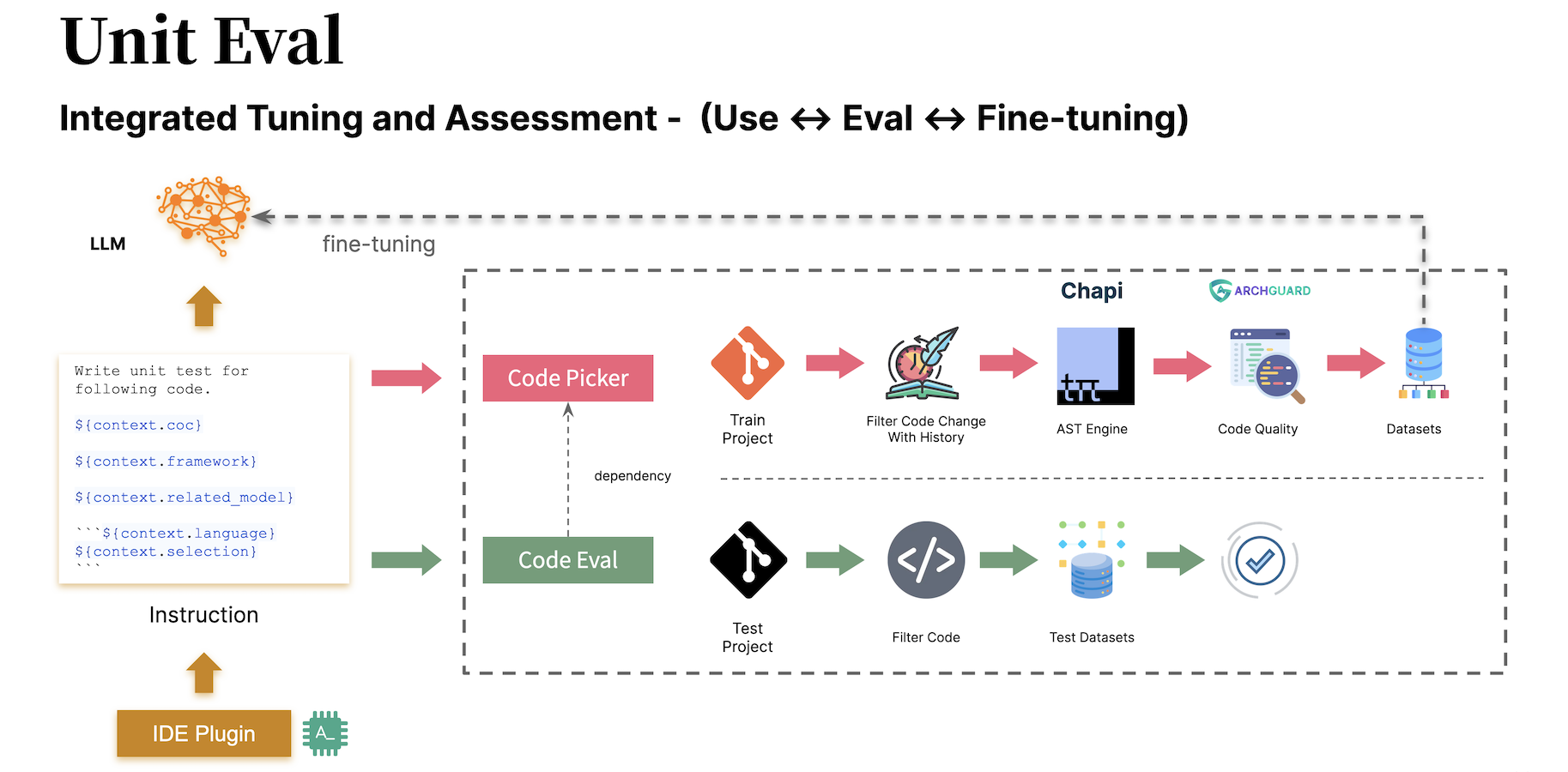

LLM benchmark/evaluation tools with fine-tuning data engineering, specifically tailored for Unit Mesh tools such as AutoDev, Studio B3, and DevOps Genius.

Docs: https://eval.unitmesh.cc/

Thanks to OpenBayes for providing computing resources.

Examples:

| name | model download (HuggingFace) | finetune Notebook | jupyter notebook Log | model download (OpenBayes) |
|---|---|---|---|---|
| DeepSeek 6.7B | unit-mesh/autodev-deepseek-6.7b-finetunes | finetune.ipynb | OpenBayes | deepseek-coder-6.7b-instruct-finetune-100steps |
| CodeGeeX2 6B | TODO | TODO | TODO | |

Features:

- Code context strategy: Related code completion, Similar Code Completion

- Completion type: inline, block, after block

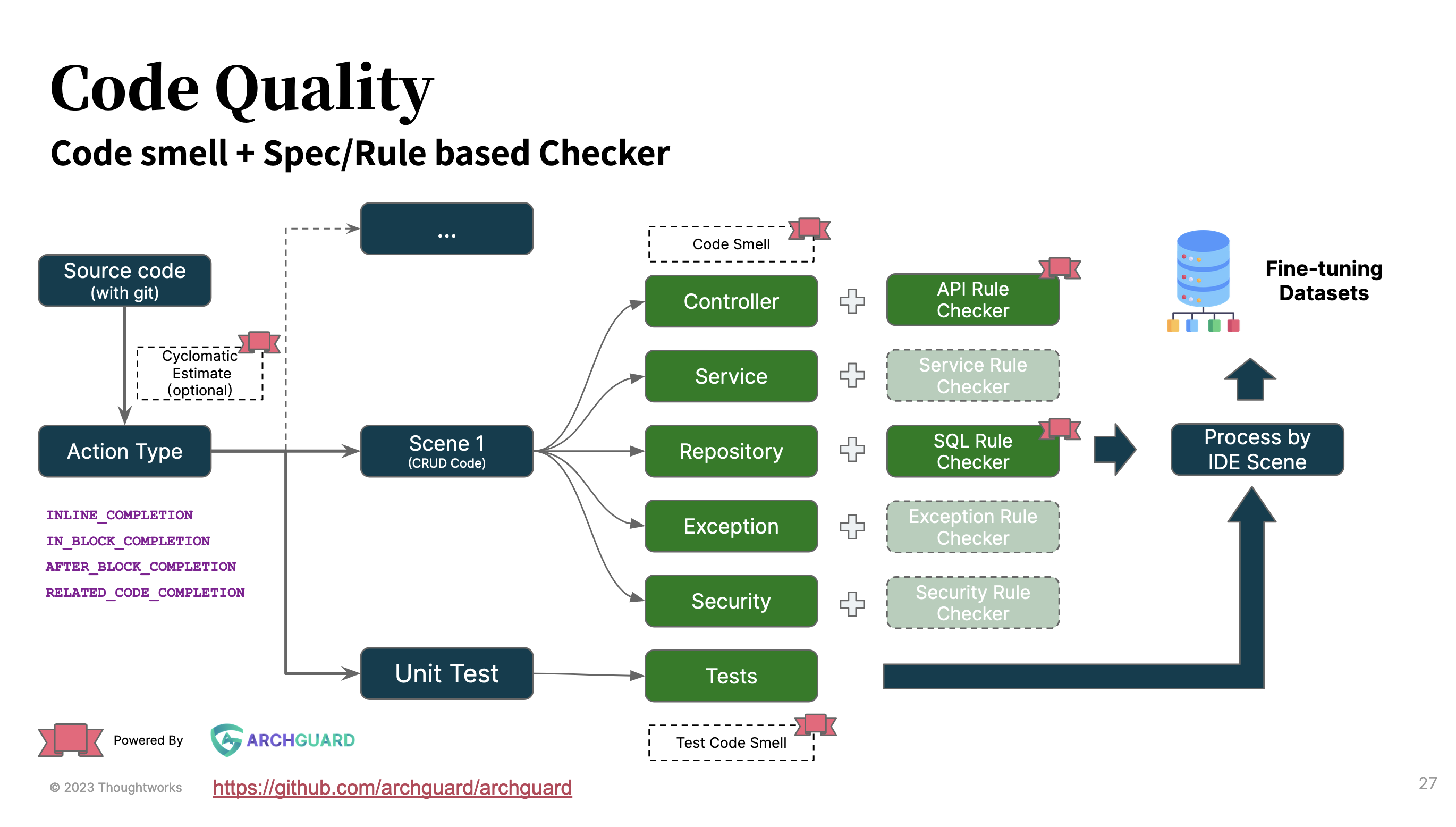

- Code quality filter and pipeline. Code smell, test smell, estimation, and more.

- Unique prompt. Integrated use of fine-tuning, evaluation, and tooling.

- Code quality pipeline. Estimates code complexity and checks bad smells, test bad smells, and more rules.

- Extensible, customizable quality thresholds. Custom rules, custom thresholds, custom quality types, and more.

- FileSize: 64k

- Complexity: 1000

- config project by `processor.yml`

- run picker: `java -jar unit-cli.jar`

- abstract syntax tree: Chapi. Used features: multiple languages mapped to the same data structure.

- legacy system analysis: Coca. Inspired: Bad Smell, Test Bad Smell

- architecture governance tool: ArchGuard. Used features: Estimation, Rule Lint (API, SQL)

- code database: CodeDB. Used features: Code analysis pipeline

Keep the same prompt: AutoDev <-> Unit Picker <-> Unit Eval

AutoDev prompt template example:

````
Write unit test for following code.
${context.coc}
${context.framework}
${context.related_model}
```${context.language}
${context.selection}
```
````

Unit Picker prompt should keep the same structure as the AutoDev prompt. Prompt example:

```kotlin
Instruction(
    instruction = "Complete ${it.language} code, return rest code, no explaining",
    output = it.output,
    input = """
        |```${it.language}
        |${it.relatedCode}
        |```
        |
        |Code:
        |```${it.language}
        |${it.beforeCursor}
        |```""".trimMargin()
)
```

Unit Eval prompt should keep the same structure as the AutoDev prompt. Prompt example:

````
Complete ${language} code, return rest code, no explaining

```${language}
${relatedCode}
```

Code:
```${language}
${beforeCursor}
```
````
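The shared structure can be pictured with a small helper that fills the Unit Eval template from its three inputs. This is only a sketch: the class `EvalPrompt` and the method `render` are hypothetical names, not part of Unit Eval, and the blank-line layout is an assumption based on the template above.

```java
final class EvalPrompt {
    // Hypothetical helper: fills the Unit Eval template with the given values.
    // The blank lines before the first fence and before "Code:" are assumed.
    static String render(String language, String relatedCode, String beforeCursor) {
        String fence = "```";
        return String.join("\n",
                "Complete " + language + " code, return rest code, no explaining",
                "",
                fence + language,
                relatedCode,
                fence,
                "",
                "Code:",
                fence + language,
                beforeCursor,
                fence);
    }

    public static void main(String[] args) {
        // Illustrative inputs only; real values would come from the picked code.
        String prompt = render(
                "java",
                "interface UserRepository { User findById(long id); }",
                "public User load(long id) {");
        System.out.println(prompt);
    }
}
```

Since the Unit Picker `input` field uses the same layout, a prompt built this way should have the same shape at evaluation time as the data used for fine-tuning.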

Before Check:

Optional quality type:

```kotlin
enum class CodeQualityType {
    BadSmell,
    TestBadSmell,
    JavaController,
    JavaRepository,
    JavaService,
}
```

Custom thresholds' config:

```kotlin
data class BsThresholds(
    val bsLongParasLength: Int = 5,
    val bsIfSwitchLength: Int = 8,
    val bsLargeLength: Int = 20,
    val bsMethodLength: Int = 30,
    val bsIfLinesLength: Int = 3,
)
```

Custom rules:

```kotlin
val apis = apiAnalyser.toContainerServices()
val ruleset = RuleSet(
    RuleType.SQL_SMELL,
    "normal",
    UnknownColumnSizeRule(),
    LimitTableNameLengthRule()
    // more rules
)

val issues = WebApiRuleVisitor(apis).visitor(listOf(ruleset))
// if issues are not empty, then the code has bad smell
```

For examples, see the examples folder.
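As a rough illustration of how thresholds like those in `BsThresholds` might gate a code sample, the sketch below checks a method's parameter count and length against the default values shown earlier. The class `ThresholdCheck`, the method `findSmells`, the smell names, and the strict `>` comparison are all assumptions made for this example; they are not the code-quality module's actual API.

```java
import java.util.ArrayList;
import java.util.List;

final class ThresholdCheck {
    // Default values copied from the BsThresholds example
    // (bsLongParasLength and bsMethodLength).
    static final int LONG_PARAMS_LENGTH = 5;
    static final int METHOD_LENGTH = 30;

    // Hypothetical check: returns the names of the smells a method triggers.
    // Treating the threshold as an exclusive upper bound is an assumption.
    static List<String> findSmells(int parameterCount, int lineCount) {
        List<String> smells = new ArrayList<>();
        if (parameterCount > LONG_PARAMS_LENGTH) {
            smells.add("LongParameterList");
        }
        if (lineCount > METHOD_LENGTH) {
            smells.add("LongMethod");
        }
        return smells;
    }

    public static void main(String[] args) {
        System.out.println(findSmells(2, 12)); // prints []
        System.out.println(findSmells(7, 45)); // prints [LongParameterList, LongMethod]
    }
}
```

A sample whose result list is empty would pass this filter; anything else would be dropped or flagged, depending on how the pipeline is configured.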

see in config-examples

1. download the latest version from GitHub Release

2. config the `unit-eval.yml` file and `connection.yml`

3. run eval: `java -jar unit-eval.jar`

PS: Connection config: https://framework.unitmesh.cc/prompt-script/connection-config

see in config-example

1. add dependency

```groovy
dependencies {
    implementation("cc.unitmesh:unit-picker:0.1.5")
    implementation("cc.unitmesh:code-quality:0.1.5")
}
```

2. config the `unit-eval.yml` file and `connection.yml`

3. write code

```java
import java.util.ArrayList;
import java.util.List;
// Imports for the unit-picker and code-quality classes used below
// (PickerOption, SimpleCodePicker, Instruction, ...) are omitted here.

public class App {
    public static void main(String[] args) {
        // Which instruction builders to run
        List<InstructionType> builderTypes = new ArrayList<>();
        builderTypes.add(InstructionType.RELATED_CODE_COMPLETION);

        // Which quality checks to apply before picking code
        List<CodeQualityType> codeQualityTypes = new ArrayList<>();
        codeQualityTypes.add(CodeQualityType.BadSmell);
        codeQualityTypes.add(CodeQualityType.JavaService);

        PickerOption pickerOption = new PickerOption(
                "https://github.com/unit-mesh/unit-eval-testing", "master", "java",
                ".", builderTypes, codeQualityTypes, new BuilderConfig()
        );

        SimpleCodePicker simpleCodePicker = new SimpleCodePicker(pickerOption);
        List<Instruction> output = simpleCodePicker.blockingExecute();

        // handle output in here
    }
}
```

This code is distributed under the MPL 2.0 license. See LICENSE in this directory.